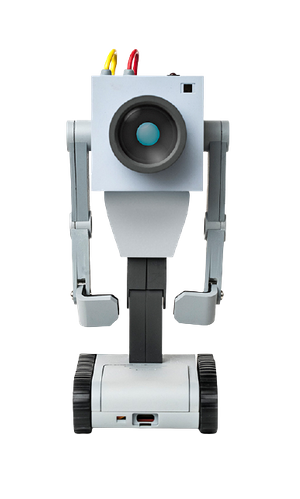

The Butter Robot

Background

“From the Rick and Morty universe, the Butter Robot is a nearly-sentient machine, originally created for the sole purpose of passing butter. With life-like movements and trademark voice, Butter Robot's complex “emotion engine” creates unique interactions and personality as it struggles to find a purpose.”

The Butter Robot is a project I worked on at Digital Dream Labs (DDL). After the success of Vector and Cozmo, DDL landed a project from Adult Swim to bring the Butter Robot to life. We were all big fans of the show already, so it was very exciting for us to work on the robot. It would also be the first robot started entirely by DDL. When the project was first announced, I was still the lead programmer on the untitled music game. Because of the scope of the new project, we had to pause production on the game and reallocate resources.

I was very excited to be one of the few people actively working on the Butter Robot. Joining the project early gave me the opportunity to be a part of the initial design.

Design and Implementation

Foundation

It was important that everyone on the team had seen the show, or at least the episode in which the Butter Robot appears, before we plotted the key points we wanted in the robot. We wanted to replicate the robot’s behavior from the show as closely as we could. We brainstormed together and agreed that the most important and obvious part was passing the butter. Beyond that, what struck us most was the existential crisis the robot shows in the episode. We have seen many robots come to life, but they are always depicted as smart machines that would eventually replace humans. We wanted to create a robot that shows existential dread, sentience, and sass, something more relatable to humans.

Features

Together, we brainstormed a list of essential features.

- Voice

First things first, we had to replicate the voice that the Butter Robot uses in the show. I took the lead on this feature. Luckily for me, we found out through Justin Roiland, the co-creator of Rick and Morty, that the Butter Robot voice was created using an open-source speech synthesis engine called Flite. There was still a lot of post-processing involved, but I was able to replicate it by adjusting the frequency and implementing time-domain harmonic scaling so that the frequency change would not affect the speed of the robot’s voice. I spun up a web server to deploy my work, but we realized that it could cause a lot of bandwidth-related limitations after production. So, I built the Flite engine and the post-processing code as Android and iOS libraries to handle the functionality locally.

- Emotion Engine

I took the lead on this feature as well. Working on the emotion engine was one of the best parts of the project. The engine defines the personality of the robot depending on its surroundings, how often you use it, how you treat it, and so on. It could also be described as the brain of the robot, as it dictates what the robot says or does at a particular time. We brainstormed the things that would influence the robot’s emotions and came up with a list of triggers. The list included petting the robot, weather information, low battery, surrounding sound levels, being picked up, having a blocked camera, falling, recognizing Cozmo or Vector, and more. The emotion engine consists of different waves, where each wave represents one of the moods: happy, sad, angry, or bored. Each wave has its own baseline algorithm that dictates how the robot behaves at a particular time when there are no external influences. The waves are spiked by their relevant triggers and then return to the baseline linearly over a certain period of time. At any given point, the wave with the highest value is considered the “mood” of the robot.
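The wave-and-spike idea can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the shipped engine: the constant baselines, the trigger names, and every number in `BASELINES` and `TRIGGERS` are hypothetical, and the real baseline algorithms were more involved than a constant.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Spike:
    start: float     # time the trigger fired, in seconds
    amount: float    # how far above the baseline the wave is pushed
    duration: float  # seconds until the wave is back at its baseline

    def value(self, t: float) -> float:
        """Linear decay from `amount` at `start` down to 0 at `start + duration`."""
        elapsed = t - self.start
        if elapsed < 0 or elapsed >= self.duration:
            return 0.0
        return self.amount * (1.0 - elapsed / self.duration)


@dataclass
class MoodWave:
    baseline: Callable[[float], float]  # wave value with no external influence
    spikes: List[Spike] = field(default_factory=list)

    def value(self, t: float) -> float:
        return self.baseline(t) + sum(s.value(t) for s in self.spikes)


class EmotionEngine:
    def __init__(self,
                 baselines: Dict[str, Callable[[float], float]],
                 triggers: Dict[str, Tuple[str, float, float]]):
        self.waves = {mood: MoodWave(fn) for mood, fn in baselines.items()}
        self.triggers = triggers  # trigger name -> (mood, amount, duration)

    def fire(self, trigger: str, t: float) -> None:
        """Spike the wave associated with `trigger` at time `t`."""
        mood, amount, duration = self.triggers[trigger]
        self.waves[mood].spikes.append(Spike(t, amount, duration))

    def mood(self, t: float) -> str:
        """The current mood is the wave with the highest value at time `t`."""
        return max(self.waves, key=lambda m: self.waves[m].value(t))


# Hypothetical values, chosen only to make the example readable.
BASELINES = {
    "happy": lambda t: 0.3,
    "sad": lambda t: 0.2,
    "angry": lambda t: 0.1,
    "bored": lambda t: 0.4,
}
TRIGGERS = {
    "petted": ("happy", 0.5, 60.0),
    "low_battery": ("sad", 0.6, 120.0),
    "picked_up": ("angry", 0.7, 30.0),
}
```

With these placeholder numbers, an idle robot is bored, petting it at `t = 10` makes it happy, and the happy wave has decayed back below the bored baseline by `t = 65`.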

- Garbage Brain

Closely related to the emotion engine, Garbage Brain is the AI that generates unique sentences for the Butter Robot based on its mood. I led the technology design decisions for this module and collaborated with the cloud team on the implementation. It was my first time using Go, so it was a great learning experience, and I truly believe that Go is the future of cloud systems, if not the present. We first implemented Markov chains to generate sentences. The sentences they generated made sense grammatically but were not cohesive enough. While that felt on brand with the robot’s personality, it would be a much better user experience if the robot was clever and witty. With help from the systems team, I spun up a cloud server and set up OpenAI’s GPT-2, which produced much better results. Remember that this was before the recent ChatGPT boom, so I feel proud of getting on the train a little earlier than most people. We trained it with our own lines, and the robot made much more sense and would spit out hilarious lines.

In the video above, at the 0:29 mark, the Butter Robot implies that the most worrying repercussion of a robot killing all humans is that the robot's life insurance policy wouldn't hold up. There are many such clever and funny lines, but I will wait for the product to hit the shelves before sharing those. We shared a demo with Justin and he was very happy with the results.
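The Markov chain approach that Garbage Brain started with can be sketched as follows. This is a minimal Python illustration rather than the original Go service: the function names, the sentinel tokens, and the placeholder training lines in `LINES_BY_MOOD` are all assumptions made for the example. It also shows why the output reads as grammatical but not cohesive: each word is chosen by looking only at the word before it.

```python
import random
from collections import defaultdict

START, END = "<s>", "</s>"  # sentinel tokens marking sentence boundaries

# Placeholder training lines, one small corpus per mood (hypothetical).
LINES_BY_MOOD = {
    "sad": [
        "what is my purpose",
        "my purpose is to pass butter",
        "oh my god",
    ],
    "bored": [
        "i pass butter",
        "i pass butter all day",
    ],
}


def build_chain(lines, order=1):
    """Map each run of `order` words to the list of words seen right after it."""
    chain = defaultdict(list)
    for line in lines:
        padded = [START] * order + line.split() + [END]
        for i in range(len(padded) - order):
            state = tuple(padded[i:i + order])
            chain[state].append(padded[i + order])
    return chain


def generate(chain, order=1, rng=None, max_words=30):
    """Walk the chain from the start state until END or `max_words` is reached."""
    rng = rng or random.Random()
    state = (START,) * order
    words = []
    while len(words) < max_words:
        nxt = rng.choice(chain[state])
        if nxt == END:
            break
        words.append(nxt)
        state = state[1:] + (nxt,)
    return " ".join(words)


if __name__ == "__main__":
    sad_chain = build_chain(LINES_BY_MOOD["sad"])
    print(generate(sad_chain))
```

Keeping one chain per mood is one simple way to tie the generated sentence to the current mood; raising `order` makes the output more coherent but also closer to a copy of the training lines.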

- Mobile App

The main purpose of the application is to interface with the firmware. The app was created completely from scratch in Unity. It uses Protobuf messages to send and receive data from the firmware over Wi-Fi. My main contributions to the app were implementing the emotion engine, integrating the Flite engine plugin by building it for mobile architectures, integrating the post-processing plugins, and integrating a framework to talk to Garbage Brain.

- Direct Control Mode

This is a feature of the mobile app that lets the user steer the robot using UI joysticks. The user can also control the robot’s arms and hands and try to pass the butter themselves. They can also use this mode to get a live camera feed from the robot, play pre-loaded animations, and more.

- Coding Mode

While this has not yet been implemented, coding mode would let users visually program the robot using drag-and-drop UI elements. This would allow users to program their favorite scenes from the show, or be creative and invent new scenarios.

- Passing Mode

In passing mode, the robot will finally do what it is meant to do: pass the butter! Using the camera feed, the robot will look for fiducials and identify the butter tray. Upon finding the tray, the robot will create a map and, using audio cues from the user, try to deliver the tray to where the audio comes from. Because of hardware limitations, this mode has gone through a lot of design changes and iterations, and it is yet to be implemented.

Conclusion

DDL currently makes two companion robots, Vector and Cozmo. The biggest difference between the two is that Vector listens to commands, while Cozmo can be fully programmed and controlled just by using your phone. We wanted the Butter Robot to have the best of both worlds, so we built our foundation on being able to control the robot while also giving it its own personality. The Butter Robot was set to release in October 2022, but unfortunately ran into development issues. Still, I am very proud to have worked as one of the key members on this project. More information is available on the website: https://thebutterrobot.com/